Explore our archive below

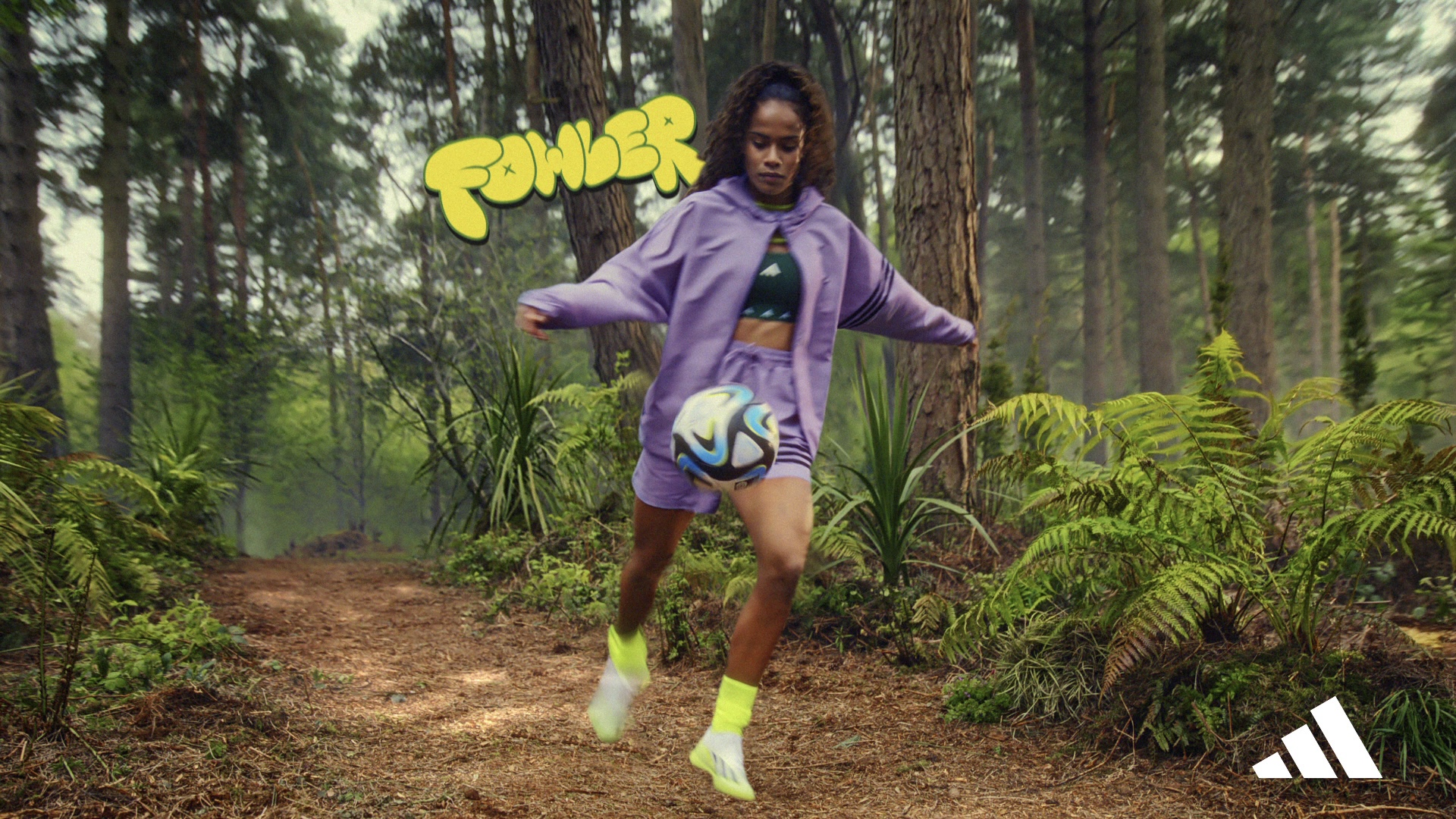

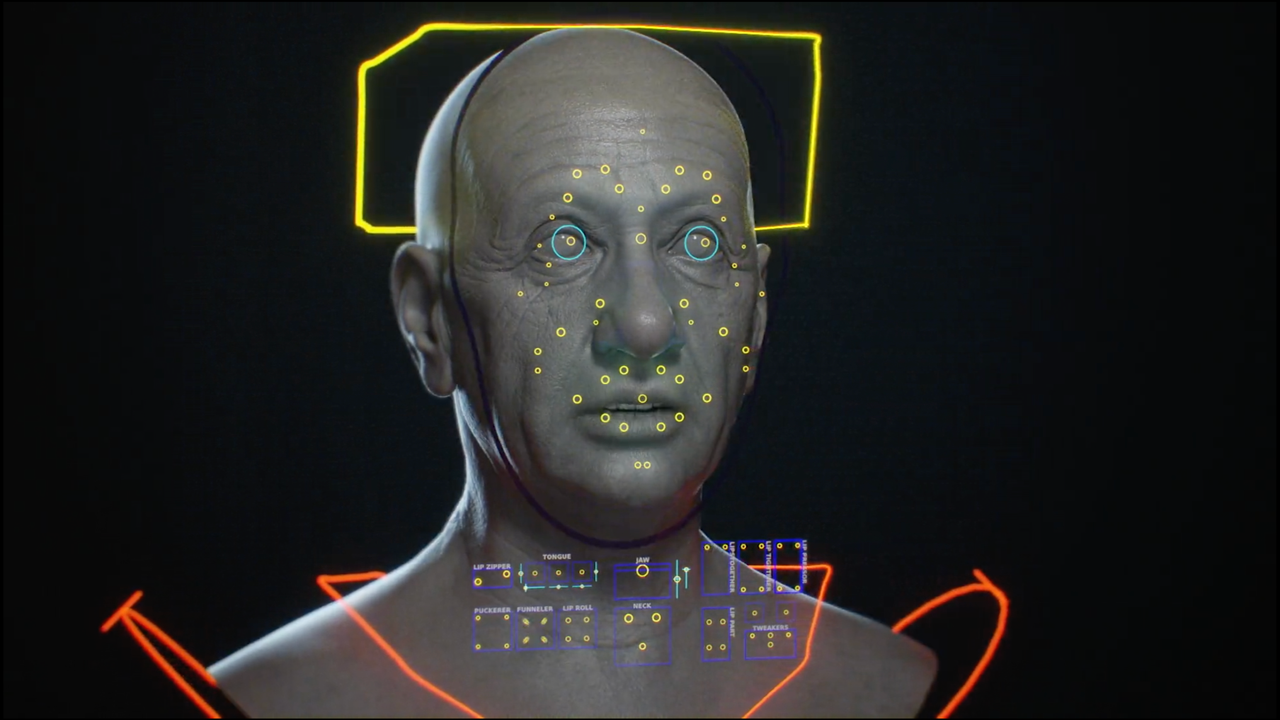

FC Online 24

Electronic Arts

Nothing Works

Declan McKenna

Check In On Your Friends

Seize the Awkward

Creo 2

Specialized

Loki for The New Samsung Galaxy S23 Ultra

Samsung and Disney

Faith's Flowers

Amazon Business

Esto es Volar

Aeromexico

Roubaix SL8

Specialized

Driving While Black

Driving While Black

HEADSPACE

Benjamin Earl Turner

Tarmac SL8

Specialized

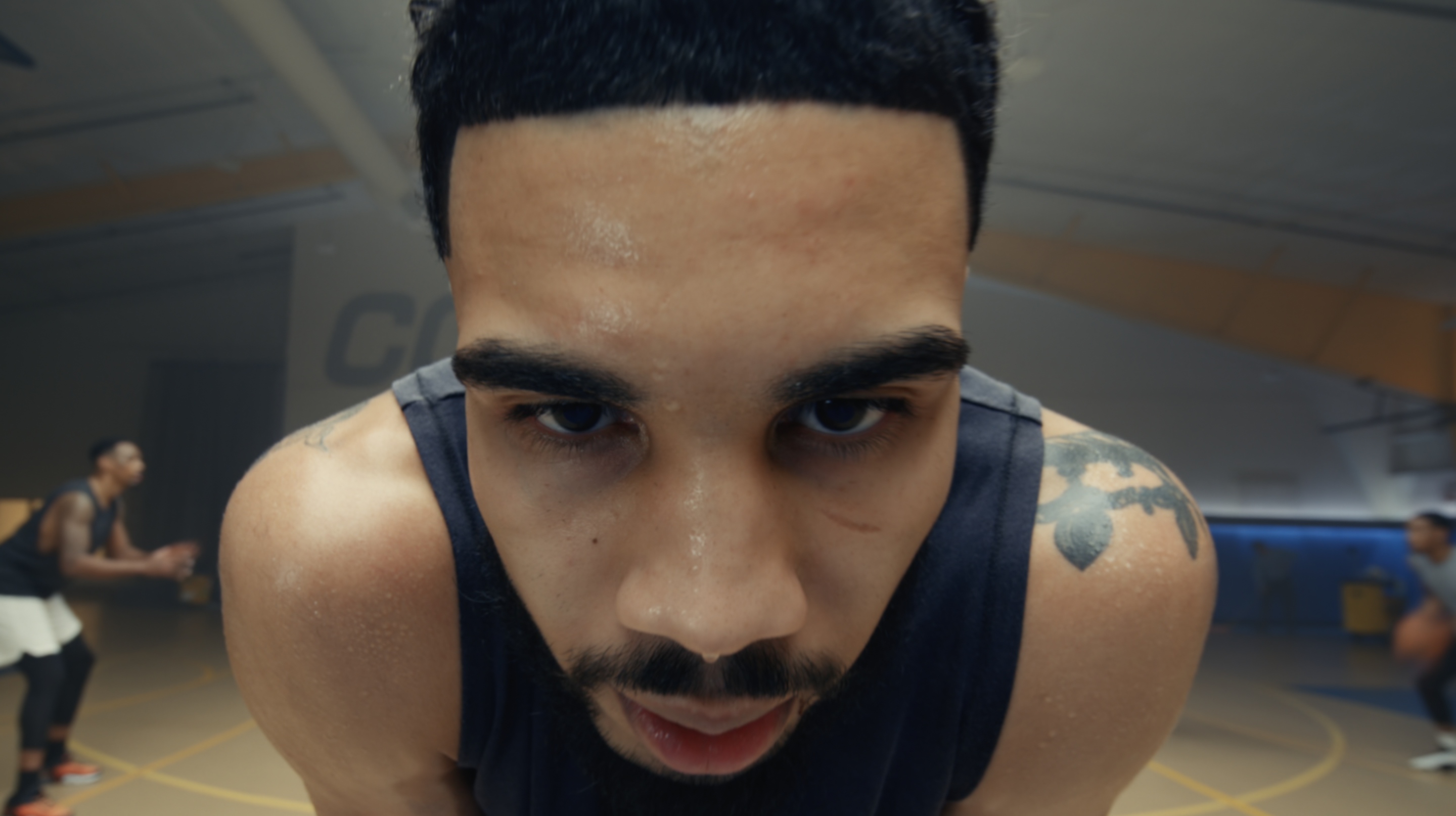

Rocky X Beats Studio Pro

Beats by Dre

Keep Pushing

The Hartford

Clap

Confused.com

Charlotte Tilbury featuring Bella Hadid

Charlotte Tilbury

Lady Khaki

Christian Louboutin

Diablo IV | Launch Live Action Trailer

Blizzard Entertainment

Rock Climbing

Pennsylvania Lottery

More of Life Brought to Life | Sneakers

The New York Times

G.O.A.T.

Strongbow Ultra

Keep Pushing (Teaser)

The Hartford

ElectroKinetic

Mercedes-Benz

Anything

Squarespace

Jeep | Electric Slide

Super Bowl 2023

Doritos | Jack's New Angle

Super Bowl 2023

Hellmann's | Hamm & Brie

Super Bowl 2023

E-Trade | Wedding

Super Bowl 2023

Popcorners | Breaking Good

Super Bowl 2023

Kia | Binky

Super Bowl 2023

YouTube | Cat

Super Bowl 2023

California Lottery | Power B-Ball

Super Bowl 2023

Amazon | Saving Sawyer

Super Bowl 2023

Busch Light | Shelter

Super Bowl 2023

Bud Light | Hold

Super Bowl 2023

DoorDash | Chef's Groceries

Super Bowl 2023

Remy Martin | Inch by Inch

Super Bowl 2023

Football | Director's Cut

WHOLLY® GUACAMOLE

National Notes

Electoral Committee

FDR

The History Channel

Mayhem

Allstate

Mayhem

Allstate

NASCAR

NBC Sports

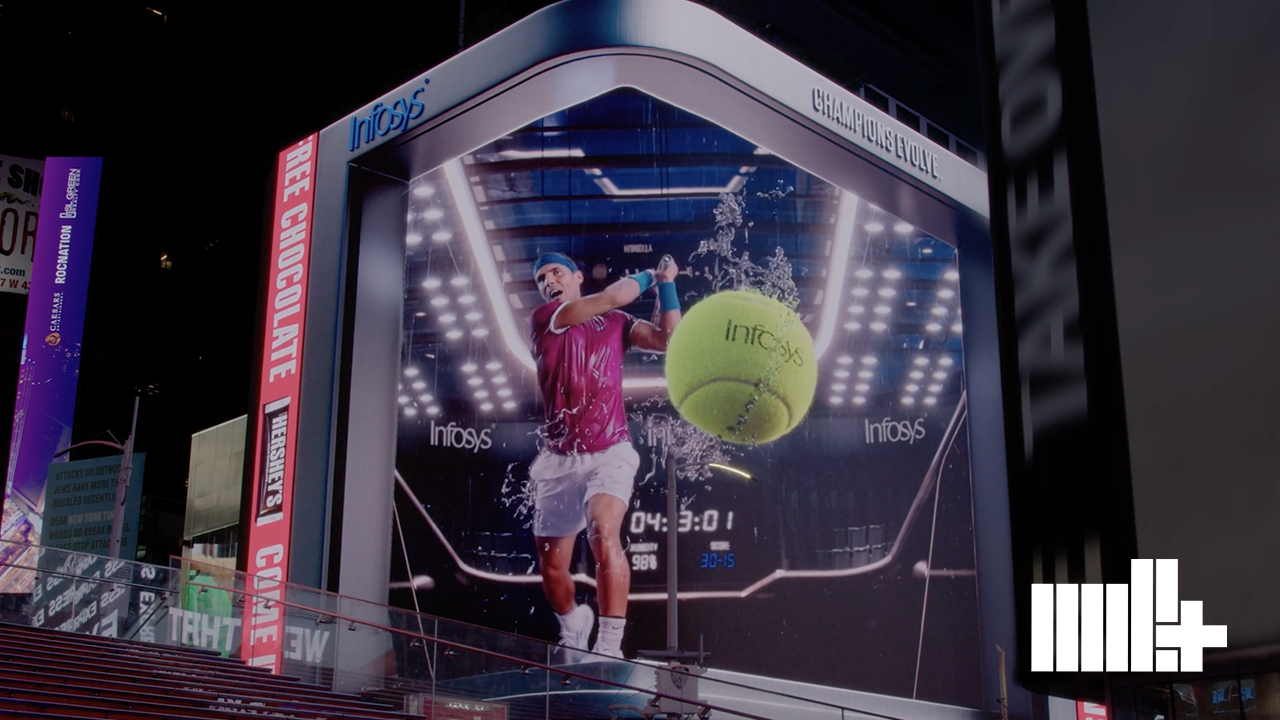

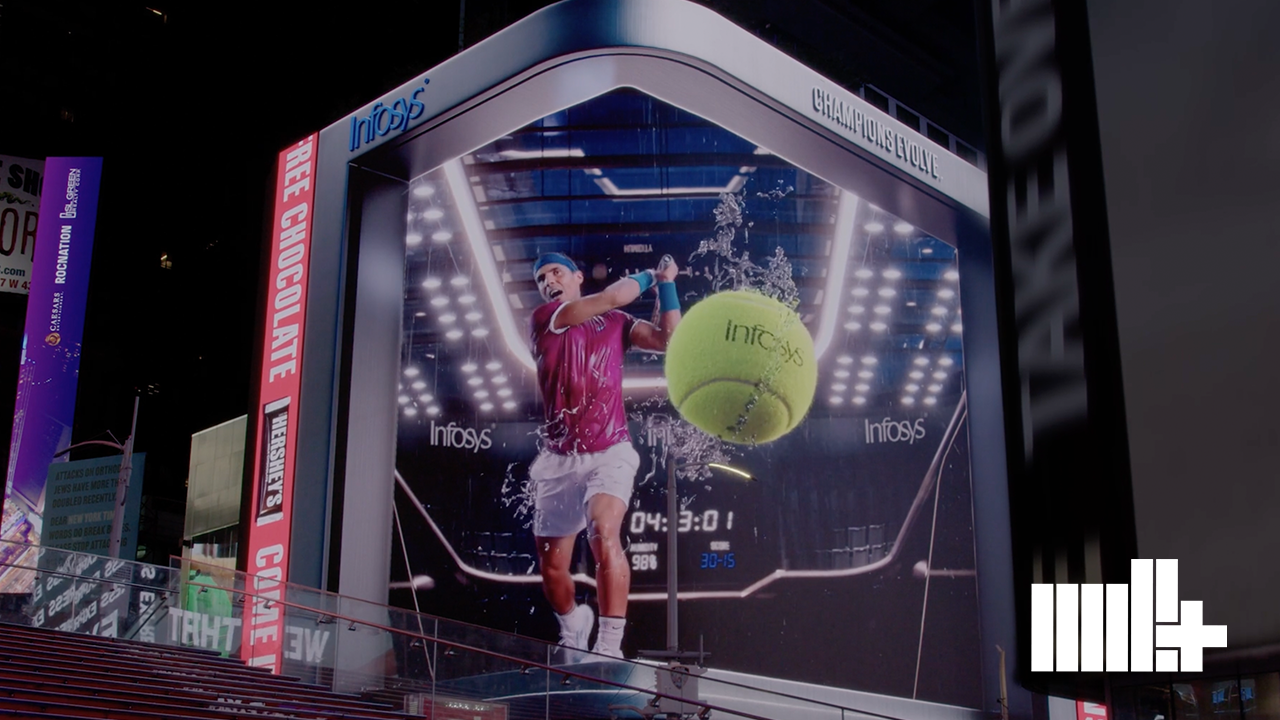

Tennis That Sees Past Gender

United Cup 2023

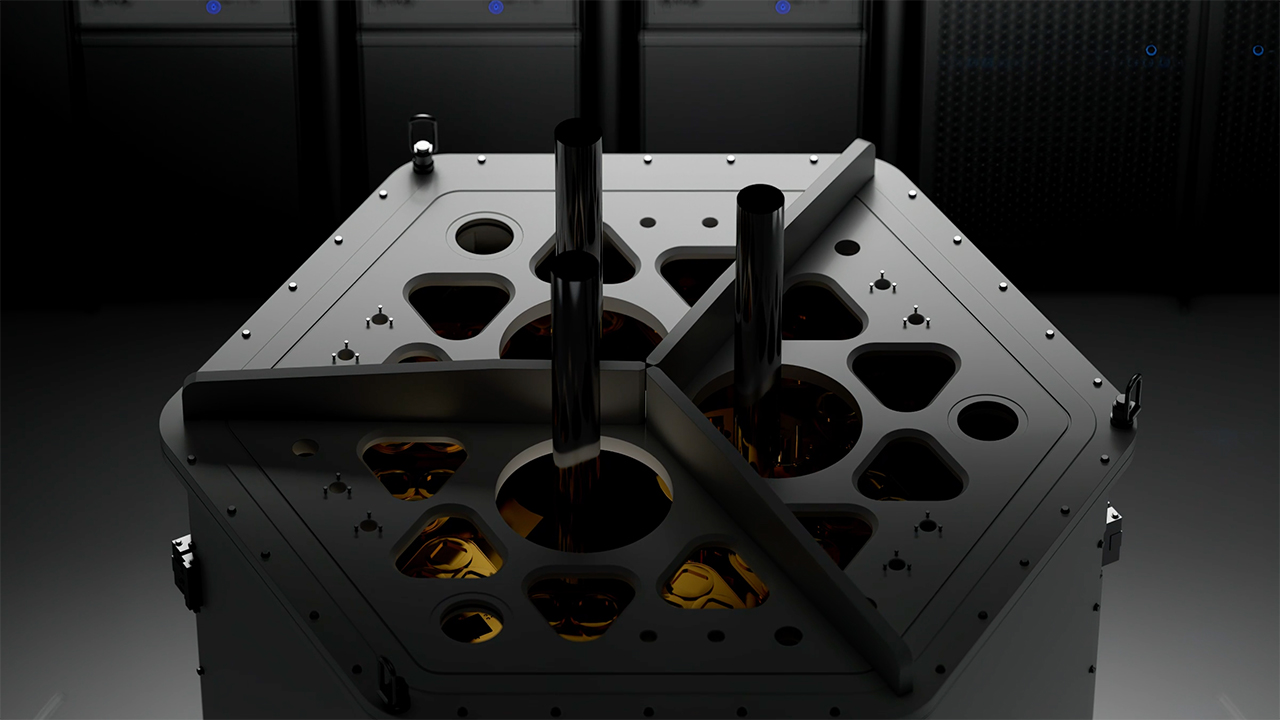

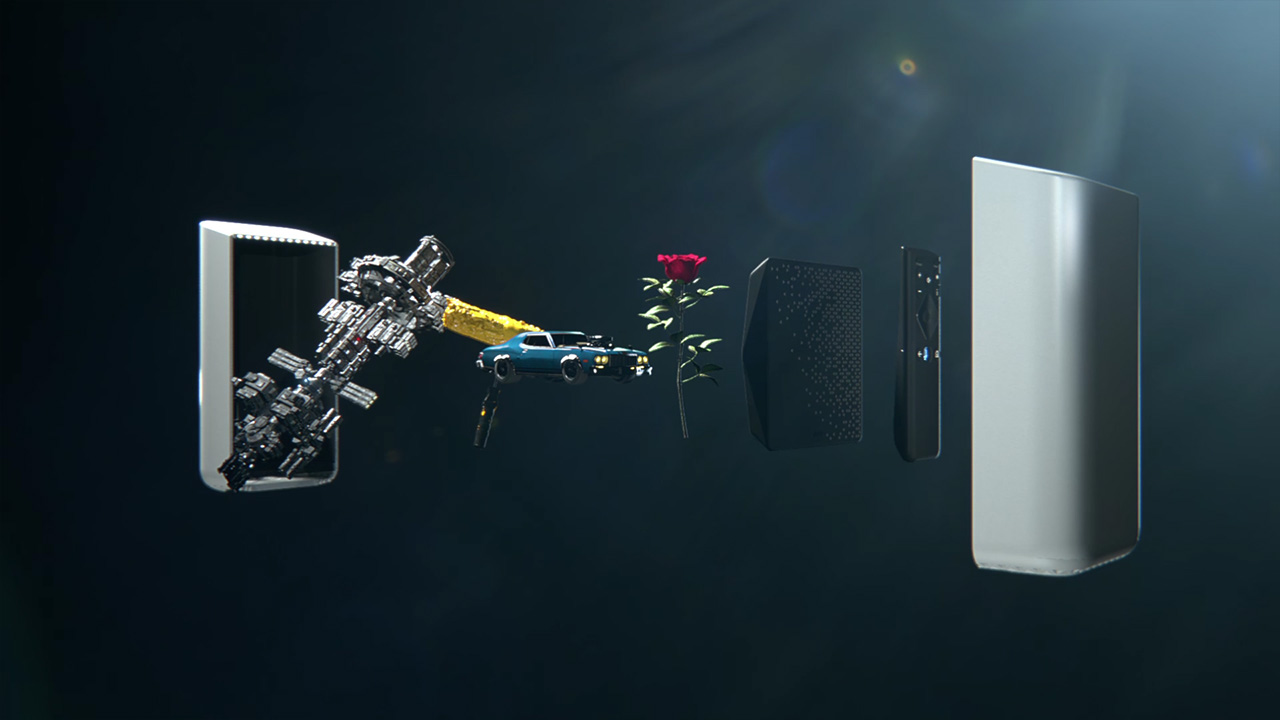

System Two

IBM Quantum

Osprey

IBM Quantum

The Sims | Pass the Spark

Electronic Arts

The March

Salesforce

Fairy & Duckie

Marks and Spencer

Taco Night

On The Rocks

LOL

Walmart

Vote Your Voice

Planned Parenthood

The Noisy Generation

British Heart Foundation

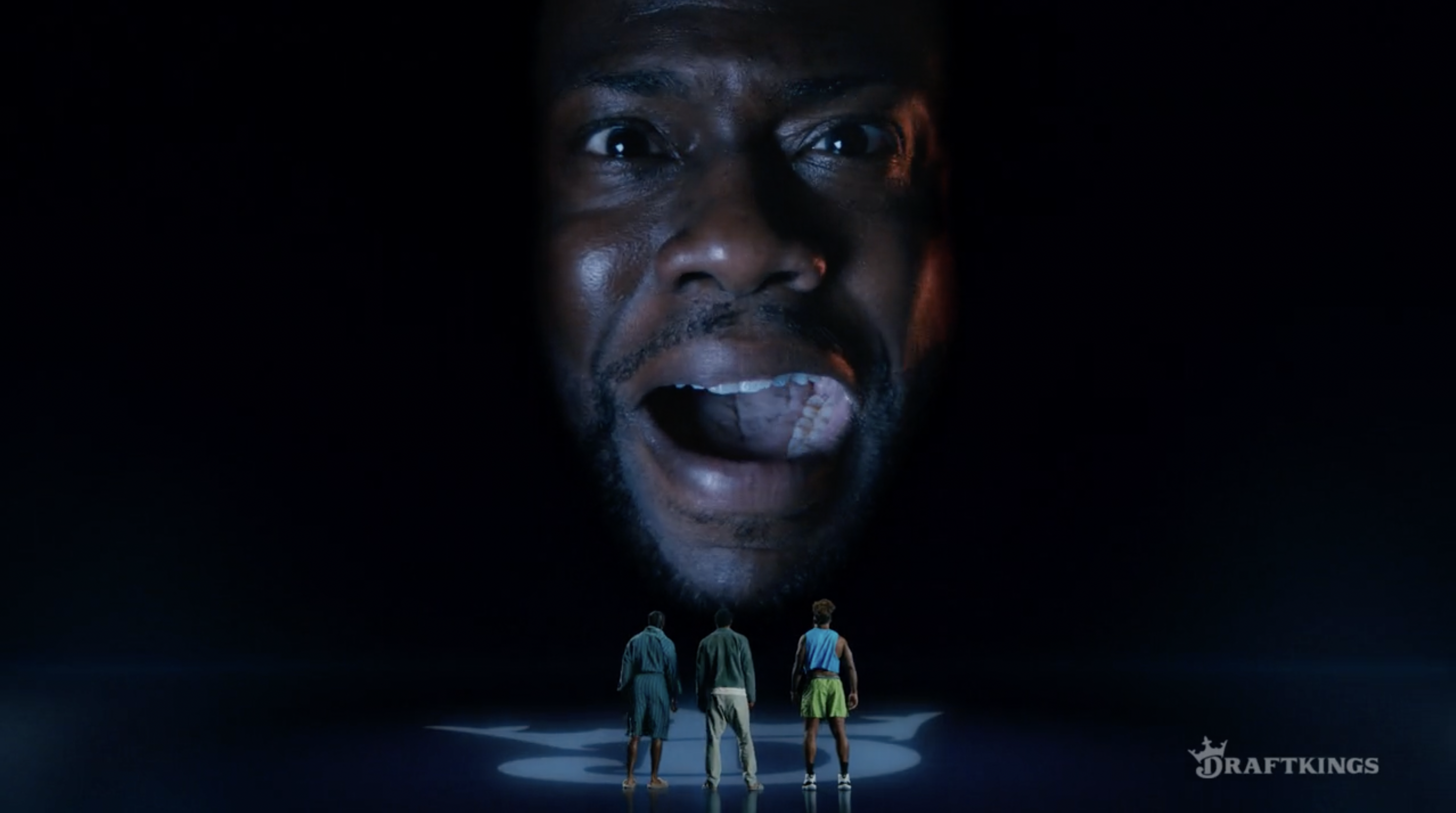

The Kevinverse

DraftKings

Look To Him

Greentea Peng

Levels to Play

Logitech G

Prepared For Life

Allianz Insurance

Scary Fast

Ford Raptor R

The Life Artois

Stella Artois

Flying Trucks

Deutsche Bahn

Night Drivers

Volkswagen Taos

Camp McDonald's

McDonald's

Usher x Remy Martin

Remy Martin

FIFA 23

EA SPORTS

Stay With Me

Calvin Harris, Justin Timberlake, Halsey, Pharrell

Clarity

TRY featuring EARTHGANG

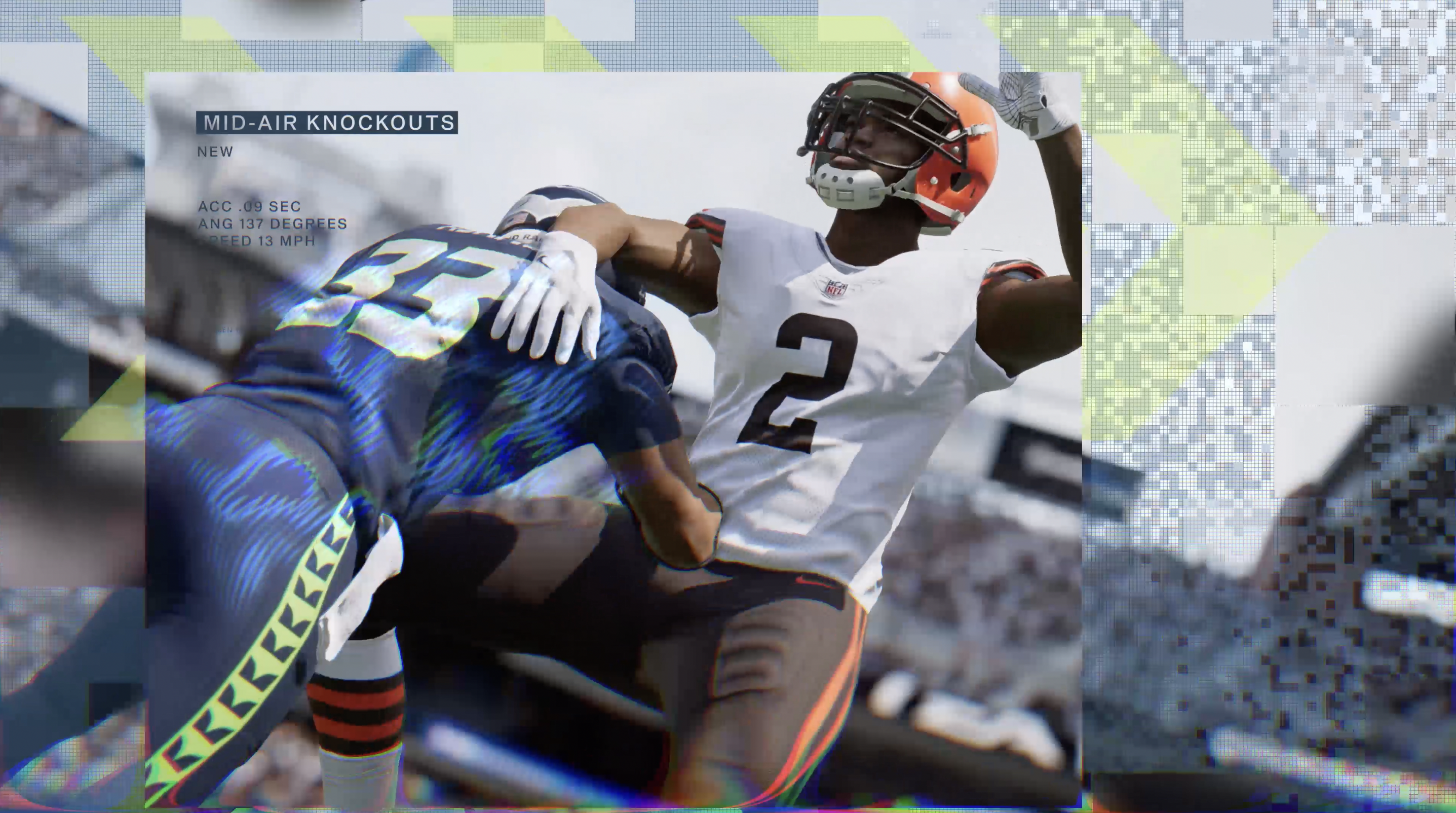

Madden '23 Game Trailer

EA Madden NFL

Potion

Calvin Harris, Dua Lipa, Young Thug

Anthem Film

Ancient Nutrition

Sustainability

Ancient Nutrition

SBO Probiotics

Ancient Nutrition

SBO Collagen

Ancient Nutrition

Industry X

Accenture

Dark Mode

Beats by Dre

GOAT

Strongbow

Prime

Direct Line

Cryptoblades Kingdom

Cryptoblades

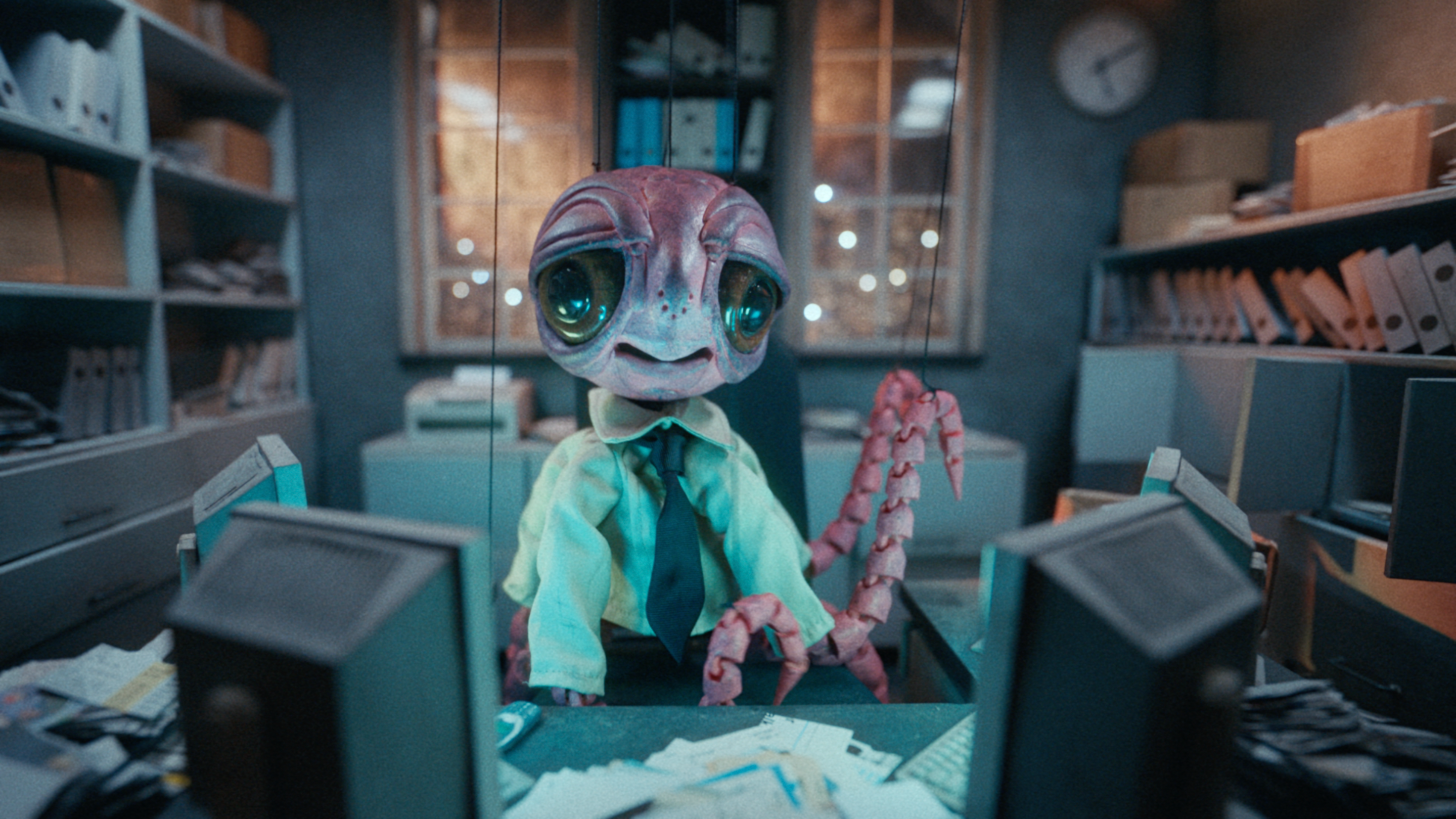

Office Tour

Blue Bunny

Neighbourhood

Enterprise

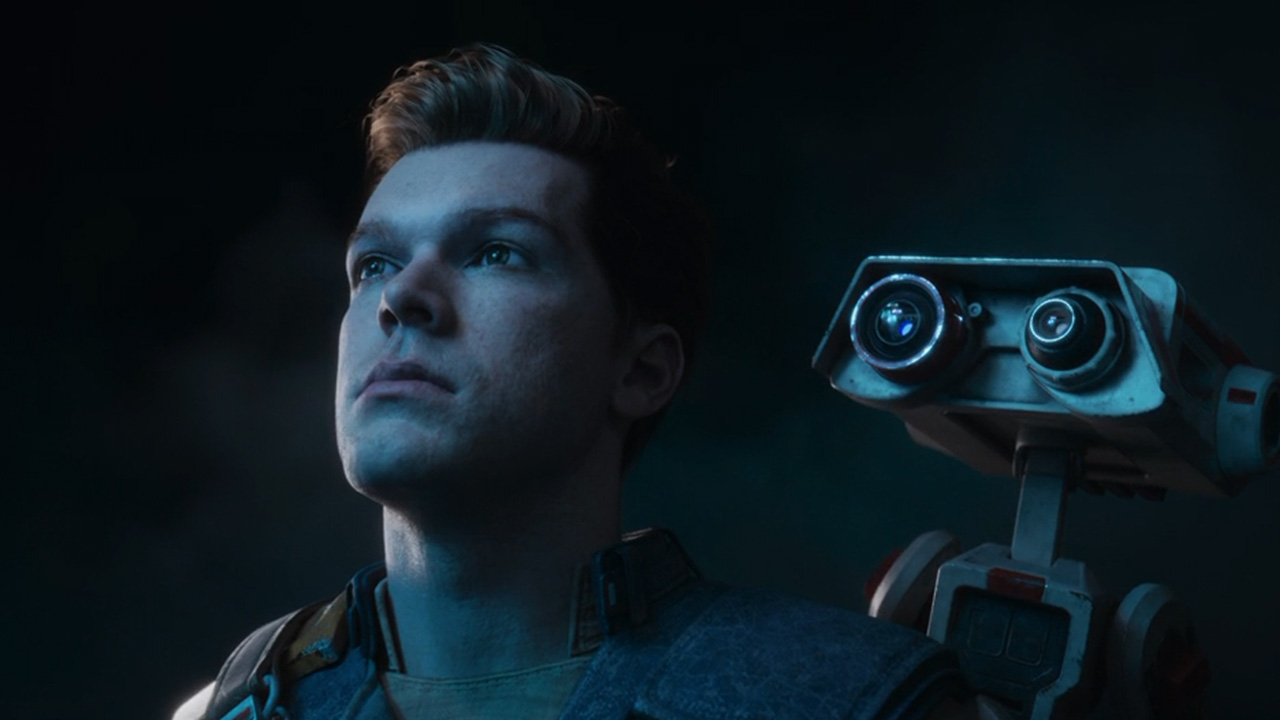

DEATH STRANDING

KOJIMA PRODUCTIONS

Spring Campaign 2022

Neiman Marcus

Storm

Paramount+

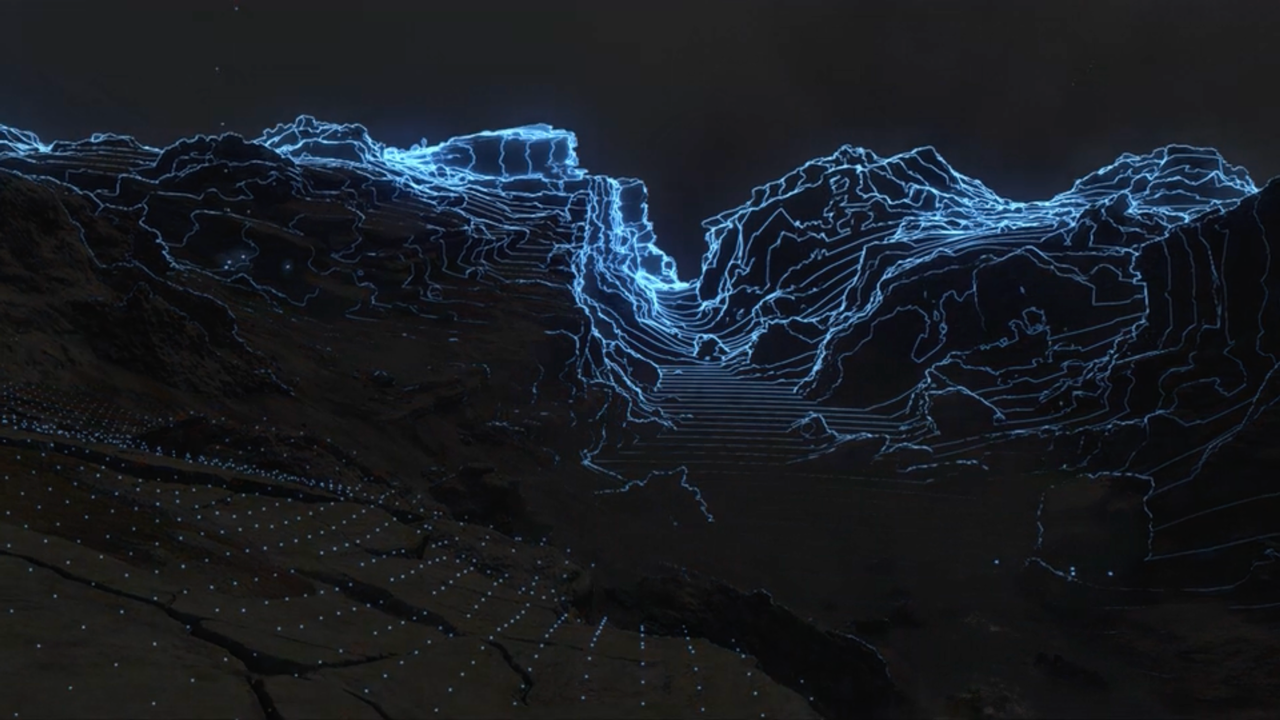

Cave Paintings

Paramount+

E-Trade | Off The Grid

Super Bowl 2022

Carvana | Oversharing Mom

Super Bowl 2022

Salesforce | The New Frontier

Super Bowl 2022

Intuit QuickBooks | Duality Duets

Super Bowl 2022

Greenlight | I'll Take It

Super Bowl 2022

Squarespace | Sally's Seashells

Super Bowl 2022

Vroom | Flake The Musical

Super Bowl 2022

Amazon | Mind Reader

Super Bowl 2022

Budweiser | A Clydesdale's Journey

Super Bowl 2022

Crypto.com | Moment of Truth

Super Bowl 2022

Michelob ULTRA | Superior Bowl

Super Bowl 2022

Strange Choices

YouTube TV

Gong.io | Gong Ho

Super Bowl 2022

Busch Beer | Voice Of The Mountains

Super Bowl 2022

Toyota Tundra | The Joneses

Super Bowl 2022

AT&T | A Lot in Common

Super Bowl 2022

Subway | The Vault

Super Bowl Fan Experience

Nissan Presents | Thrill Driver

Super Bowl 2022

Chevy | New Generation

Super Bowl 2022

Taco Bell | The Grande Escape

Super Bowl 2022

Expedia | Stuff

Super Bowl 2022

Rocket Mortgage | Dream House

Super Bowl 2022

McDonald's | CanIGetUhhhh

Super Bowl 2022

DraftKings | Fortune

Super Bowl 2022

Independent | Vera

New York Times

Independent | Jordan

New York Times

Google x NBA

Google Pixel 6

Bonfire

Paramount+

FA21 | Deltas

Nike Jordan

Walter in the Winter

Chevy Silverado

Prism

Sensorium Galaxy

Head for the Mountains

Busch Light

Imagination

Wren Kitchens

Anthem Film

Hennessy VSOP

Dazed - Elementary

Nike x ACG

Shiny Robot

YouTube Kids

Sims: Find Yourselves | Mealtime

Electronic Arts

Africa Direct

Al Jazeera

An Unlikely Friendship

Amazon Prime

Imposters

San Diego Zoo

Autumn

Carhartt

Teaser

Sea Mirror

Holiday Campaign 2021

Neiman Marcus

Rye

Elijah Craig

Brand Film

Sea Mirror

Set Yourself Free

Starling Bank

Advanced Night Repair

Estee Lauder

Come Direct

Direct Line

Spring 2022

Edeline Lee

PSA | In the Spotlight

Stuttering Association for the Young

Easiest Decision

Capital One

Our House, Your House

Los Angeles Rams

Custom Chariot

Mercedes-Benz x Cinderella

Back On Track

National Rail

Tools

Fetch.com

Fall Campaign 2021

Neiman Marcus

Feel

Lululemon

One Up

Xfinity

Reese's Extreme

Dairy Queen

Oh Henry

Dairy Queen

Cat

Chevy Silverado

Train Body & Mind

NordicTrack

New Football

Nike Football

Valorant

Riot Games

Dungeons & Dragons: Dark Alliance | Cinematic Trailer

Wizards of the Coast

Honey Dijon

Calvin Klein

Watch Me

Ford Mustang

See Us

The Asian American Foundation

Einstein's New Lights

Smart Energy

Einstein's Race Track

Smart Energy

Einstein Loves Wind

Smart Energy

Einstein Knows Best BTS

Smart Energy